Monday 26th November, 2018

Colour analysis, Colour-scheme generators, Machine learning

Monday 19th November, 2018

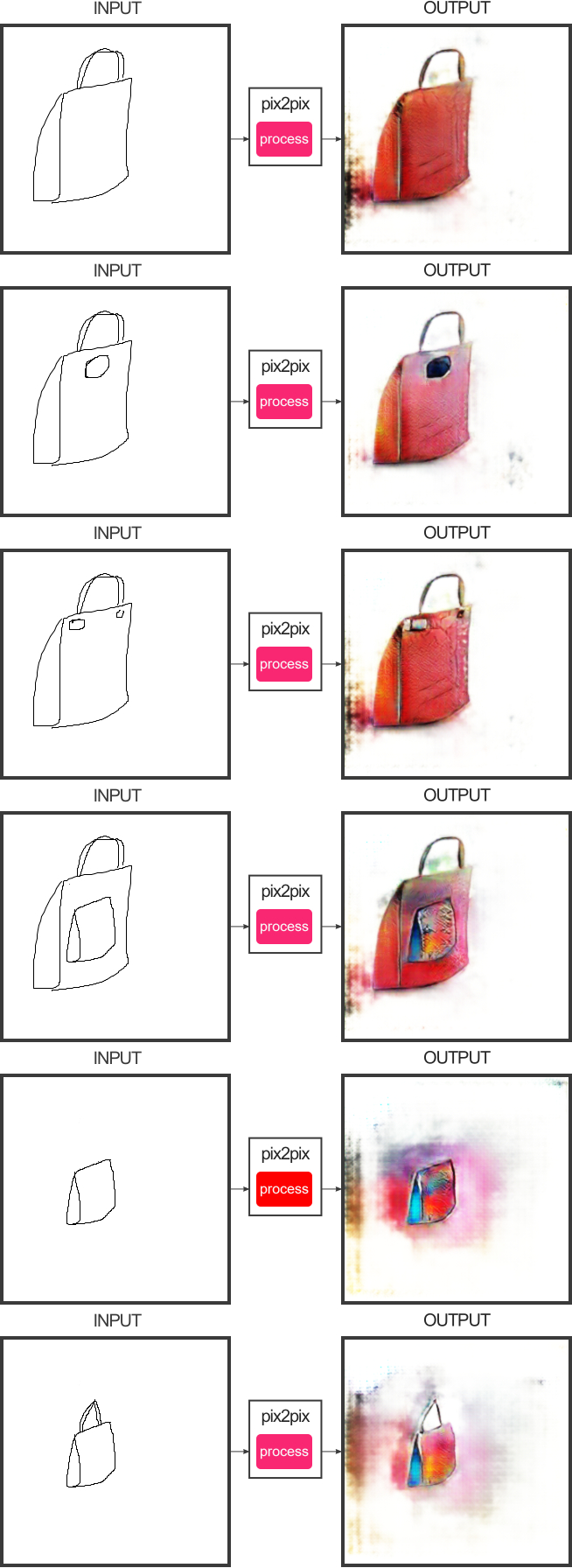

To be fair, there's very little about the bag that's mine, apart from its outline. I made the image by sketching a handbag in the "Input" box in the "edges2handbags" section of "Image-to-Image Demo Interactive Image Translation with pix2pix-tensorflow", by Christopher Hesse. Once I'd done so and pressed "Process", his software did the rest:

As well as handbags, Christopher Hesse's page allows you to generate shoes from sketches, cats from sketches (with gruesome results if you get it wrong), and buildings from facade plans. It's all based on Hesse's re-implementation of pix2pix, a rather wonderful piece of machine-learning software, which can be trained to carry out a variety of general-purpose — and hard — image transformations.

To be trained, pix2pix must be fed a database of pairs of images. With the handbags, shoes, and cats, the "output" image of each pair was a photo of a handbag, shoe, or cat. The other image in the pair, the "input", was a black-and-white "sketch" thereof, automatically generated by software that detects the edges of objects. Once pix2pix has been trained, it can take new inputs and generate outputs from them.
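To make the idea of a training pair concrete, here is a minimal sketch in plain Python. It is only an illustration: the Sobel filter, the threshold of 100, and the names `sobel_edges` and `make_pair` are my own assumptions, and this is not the edge detector that was used to build the real handbag data. It takes a tiny greyscale "photo", derives a black-and-white edge "sketch" from it, and bundles the two as one input/output pair.

```python
# Illustrative only: a toy Sobel edge detector, NOT the software used
# to prepare the real pix2pix training data.

def sobel_edges(image, threshold=100):
    """Return a binary edge map (1 = edge) for a 2-D list of grey values."""
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    # Border pixels are left at 0 because the 3x3 window does not fit there.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Horizontal gradient: right-hand column minus left-hand column.
            gx = (image[y - 1][x + 1] + 2 * image[y][x + 1] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y][x - 1] - image[y + 1][x - 1])
            # Vertical gradient: row below minus row above.
            gy = (image[y + 1][x - 1] + 2 * image[y + 1][x] + image[y + 1][x + 1]
                  - image[y - 1][x - 1] - 2 * image[y - 1][x] - image[y - 1][x + 1])
            if abs(gx) + abs(gy) >= threshold:
                edges[y][x] = 1
    return edges


def make_pair(photo):
    """Bundle a photo with its edge sketch as one (hypothetical) training pair."""
    return {"input": sobel_edges(photo), "output": photo}


# A toy 8x8 "photo": a dark square bag (grey value 50) on a light background (200).
photo = [[200] * 8 for _ in range(8)]
for y in range(2, 6):
    for x in range(2, 6):
        photo[y][x] = 50

pair = make_pair(photo)

# Draw the sketch: '#' marks an edge pixel, '.' marks everything else.
for row in pair["input"]:
    print("".join("#" if value else "." for value in row))
```

A real training set would hold many thousands of such pairs, built from real photos rather than an 8x8 grid, but the shape of the data is the same: sketch in, photo out.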

You can try this for yourself, at various levels. To try Christopher Hesse's generators, go to his page. He recommends using it in Chrome. I tried it in Firefox, and found that the browser kept popping up messages saying "A script is slowing down the page: do you want to kill it or wait?". (Obviously, one should then wait, not kill.) Typically, this would happen three or four times during each run. But the runs do eventually end, and then you get a new handbag you can admire, or a new cat you can run away from screaming.

Training pix2pix on new sets of images would be fun. At the moment, I think this still requires knowledge of programming: that is, there aren't yet systems that will allow you to (for example) click on loads of handbag photos, automatically turn them into sketches, feed the sketch-photo pairs to a learning program, leave it to train on them, and then embed the result into a web page or app you can use to generate new pictures from sketches. No doubt someone will eventually build one, but in the meantime, the pages above plus "Pix2Pix" by Machine Learning for Artists contain enough information for a reasonably skilled programmer to get started.

And at an even deeper level, one can research into improved learning programs for fashion design, as in this recent paper: "DeSIGN: Design Inspiration from Generative Networks" by Othman Sbai, Mohamed Elhoseiny, Antoine Bordes, Yann LeCun, and Camille Couprie. That requires a deep knowledge of machine-learning-related things such as loss functions, as well as the visual language of clothing. But let's return to something simpler, the handbags. Here are some more of my runs:

It's notable how sensitive the output is to minute changes in input. See how the texture and colour of the right-hand face of bags 1 to 4 change when I add small details to the sketch. Or the way the colouring of bag 5 changes when I add a handle.

Why? Christopher Hesse says that he trained the handbag generator on a database of about 137,000 handbag pictures collected from Amazon. But bags vary hugely in surface detailing: one bag could be made from indigo ruched satin, while another with almost the same outline could be navy viscose/polyamide netted with black lace. A not-too-clever edge detector might output very similar sketches for both. So the mapping from sketch to bag is, as mathematicians like to say, "not well behaved": moving from one point to the next, you feel like a chamois leaping around a million-dimensional version of the Brenner Pass. One infinitesimal step in one direction, and you plummet down a precipice in some other direction that you can't define and never wanted to go.
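The point about two different bags yielding near-identical sketches can be shown with a toy example. This is a sketch under my own assumptions: the neighbour-difference edge detector, the threshold of 50, and the names `edge_sketch` and `make_bag` are all hypothetical, chosen only to keep the example short. A plain bag and a subtly striped bag share an outline, and a not-too-clever detector cannot tell them apart.

```python
# Illustrative only: a deliberately crude edge detector, to show how
# surface detail can vanish from a sketch.

def edge_sketch(image, threshold=50):
    """Mark a pixel as an edge if it differs sharply from any 4-neighbour."""
    height, width = len(image), len(image[0])
    sketch = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            for dy, dx in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                ny, nx = y + dy, x + dx
                inside = 0 <= ny < height and 0 <= nx < width
                if inside and abs(image[y][x] - image[ny][nx]) > threshold:
                    sketch[y][x] = 1
    return sketch


def make_bag(fill, size=8):
    """Build a toy image: a square bag in rows/columns 2..5 on a light background.

    `fill(y, x)` gives the grey value of the bag's surface at that pixel.
    """
    return [[fill(y, x) if 2 <= y <= 5 and 2 <= x <= 5 else 220
             for x in range(size)]
            for y in range(size)]


# Same outline, different surfaces: one plain, one with faint horizontal stripes.
plain_bag = make_bag(lambda y, x: 40)
striped_bag = make_bag(lambda y, x: 40 if y % 2 == 0 else 70)

# The photos differ, but the stripes fall below the threshold,
# so both bags produce exactly the same sketch.
print(plain_bag != striped_bag)                             # True
print(edge_sketch(plain_bag) == edge_sketch(striped_bag))   # True
```

So a learner that sees only the sketch has no way of knowing which surface to paint, and has to guess from whatever faint cues the input happens to carry.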

In addition, the edge detection isn't perfect, so if you sketch a handbag using unbroken even lines, your drawing won't be using the same "notation" that the training inputs do.

And, according to a remark by Jack Qiao on https://www.producthunt.com/posts/manga-me:

Pix2pix is great for texture generation but bad at creating structure, like in the photo->map example straight lines and arcs tend to come out looking "liquified".

Here for comparison is a real bag:

an evening bag that I bought from Unicorn to use as a purse. It has lots of structure.